Uncertainty Estimation Methods in Large Language Models - A Taxonomy

Introduction

Reliability and trustworthiness are paramount challenges in the deployment of Large Language Models (LLMs). A critical component of reliable AI is Uncertainty Estimation, which aims to quantify the model’s confidence in its own generations. This post provides a systematic taxonomy of current uncertainty estimation methods, synthesizing key literature, including recent surveys such as “A Survey on Uncertainty Quantification of Large Language Models: Taxonomy, Open Research Challenges, and Future Directions” and “A Survey of Uncertainty Estimation Methods on Large Language Models.”

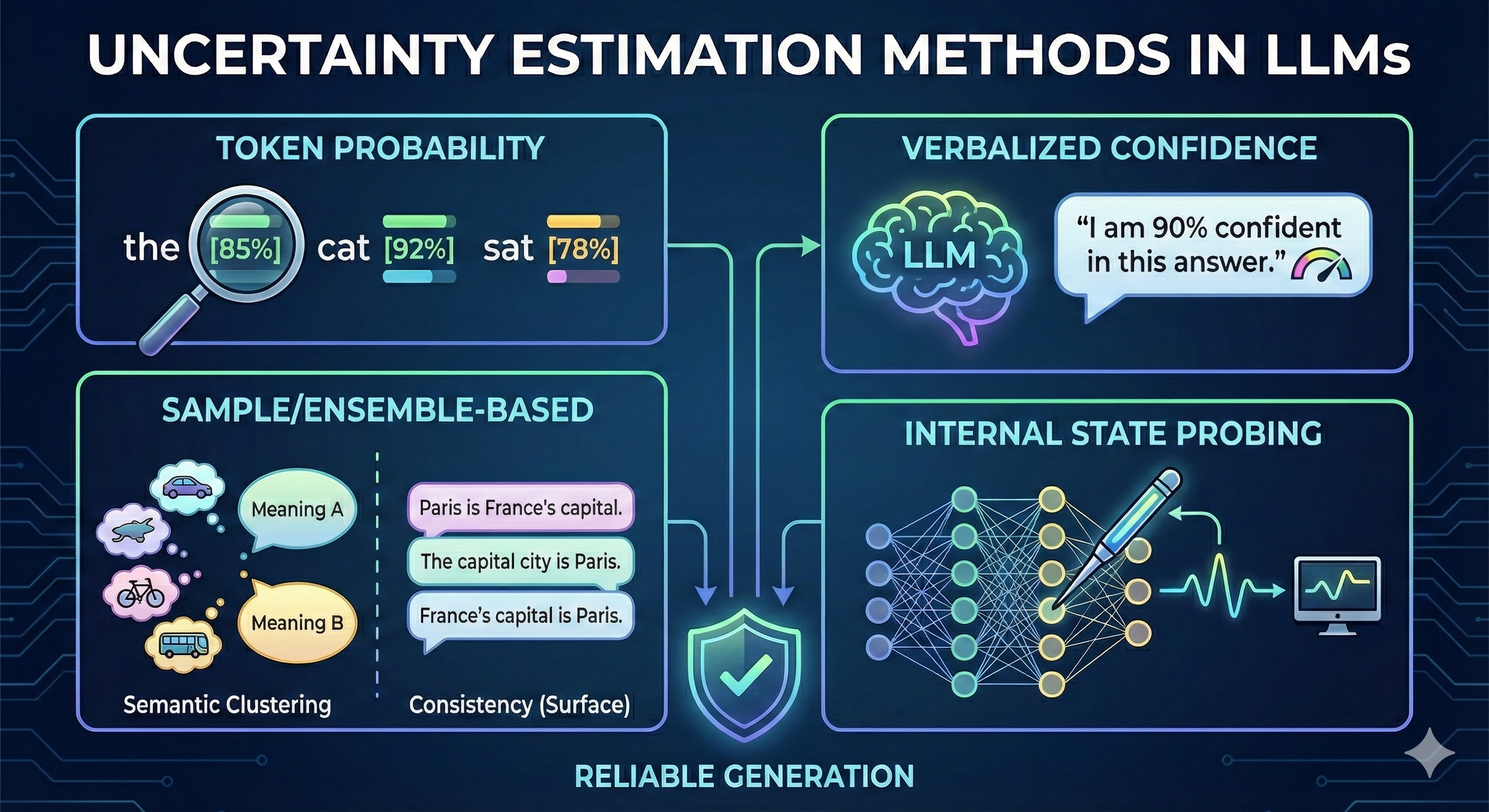

The methods fall into five distinct approaches: Token Probability, Verbalized Confidence, Consistency/Ensemble-based, Structural/Graph-based, and Hidden State Probing.

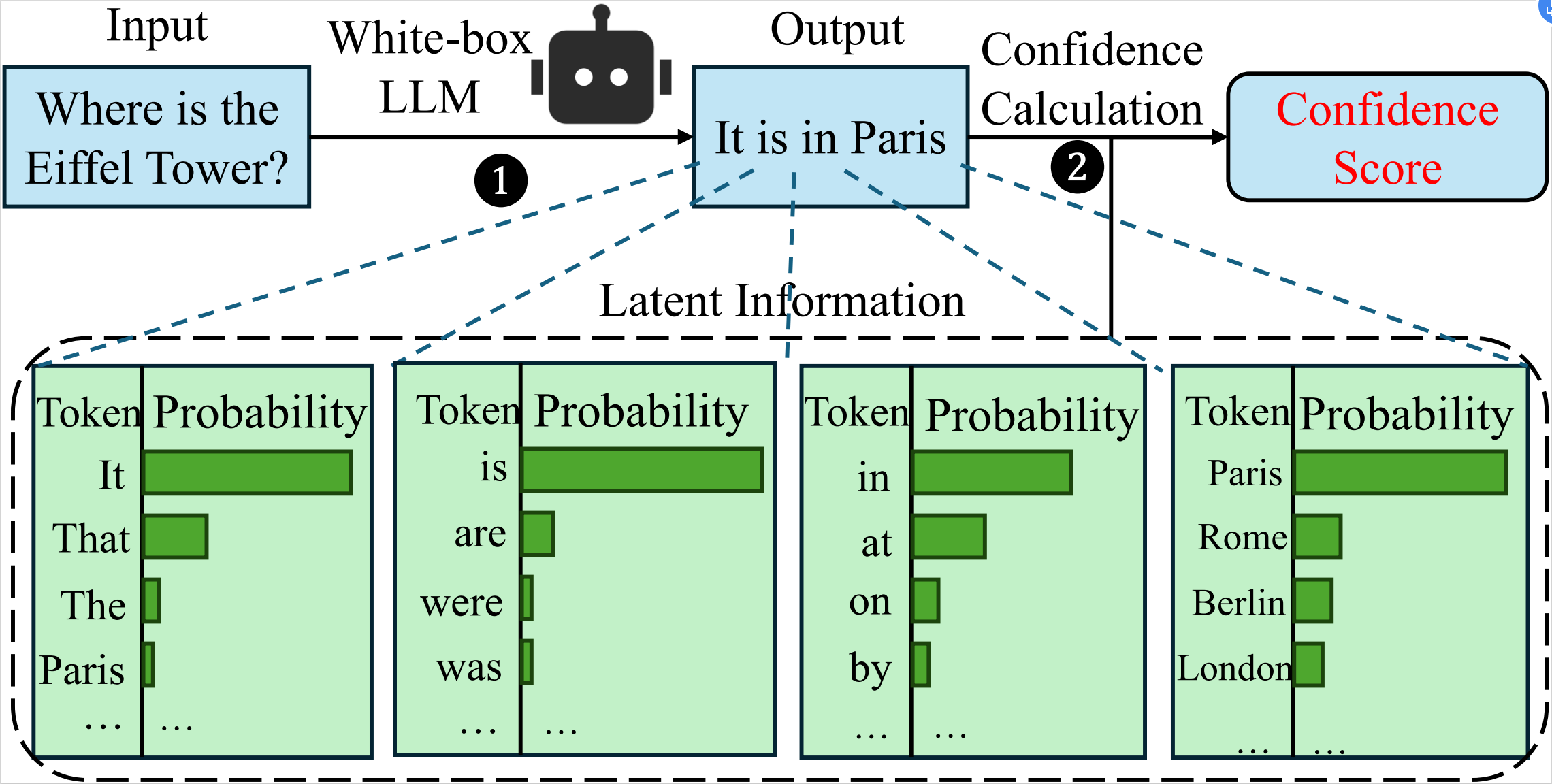

1. Logit-Based and Probability-Derived Methods

This category uses the model's internal probability distribution (which requires white-box access) to derive uncertainty scores, relying on the output logits of individual tokens or on the probability of the entire sequence.

Mechanism:

- Metrics: Statistics such as the average or maximum token negative log-probability, or the average or maximum token entropy, computed over the generated token sequence.

- Validation: To evaluate an uncertainty score, each generation is typically labeled correct or incorrect by comparing it with a reference answer using a similarity metric (Exact Match, BLEU, ROUGE-L, Jaccard index, BERTScore, cosine similarity); a score above a threshold (e.g., 0.5) counts as correct.
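
As a rough illustration of the metrics and validation step above, the following sketch computes the four token-level statistics from per-token log-probabilities and next-token distributions, and labels a generation correct via word-level Jaccard overlap. The input format (plain lists of floats) and the names `token_uncertainty_metrics` and `is_correct` are hypothetical; many APIs expose only top-k log-probabilities, in which case the entropy here is an approximation.

```python
import math
from typing import Dict, List


def token_uncertainty_metrics(
    token_logprobs: List[float],
    token_distributions: List[List[float]],
) -> Dict[str, float]:
    """Sequence-level uncertainty scores from per-token statistics.

    token_logprobs: log-probability of each generated token.
    token_distributions: next-token probability vector at each step
        (assumed to sum to 1; APIs often return only the top-k entries).
    For all four scores, higher means more uncertain.
    """
    nll = [-lp for lp in token_logprobs]
    entropies = [
        -sum(p * math.log(p) for p in dist if p > 0.0)
        for dist in token_distributions
    ]
    return {
        "avg_nll": sum(nll) / len(nll),
        "max_nll": max(nll),
        "avg_entropy": sum(entropies) / len(entropies),
        "max_entropy": max(entropies),
    }


def is_correct(prediction: str, reference: str, threshold: float = 0.5) -> bool:
    """Hypothetical labeler: correct if word-level Jaccard overlap exceeds threshold."""
    pred, ref = set(prediction.lower().split()), set(reference.lower().split())
    if not pred and not ref:
        return True
    return len(pred & ref) / len(pred | ref) > threshold
```

The max-based scores flag a single highly uncertain token, while the averages reflect the sequence as a whole.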

Limitations:

- Requires white-box access to the model (access to logits).

- Often miscalibrated: models can assign high probability to incorrect outputs (confident but wrong).

Key Literature:

- How Can We Know When Language Models Know? On the Calibration of Language Models for Question Answering

- Method: Derives confidence scores from the average or maximum negative log-likelihood and the entropy of the response tokens.

- Language Models (Mostly) Know What They Know

- Method: Has the model propose an answer and then asks it whether that answer is true; the probability it assigns to “True” serves as the confidence, denoted $P(\text{True})$.

- Uncertainty Estimation in Autoregressive Structured Prediction

- Method: Introduces Length-Normalized (LN) methods to mitigate the bias where longer sequences yield lower joint probabilities.

- MARS: Meaning-Aware Response Scoring for Uncertainty Estimation in Generative LLMs

- Method: Improves upon standard length normalization by assigning weights to each token via BERT embeddings to capture semantic importance.
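
To make the length bias concrete, here is a minimal sketch contrasting the joint log-probability, the length-normalized (per-token average) log-probability, and a weighted average in the spirit of MARS. The `weights` argument is a hypothetical stand-in: MARS obtains token importance from a BERT-like model, whereas here the weights are simply passed in by hand.

```python
import math
from typing import Dict, List, Optional


def sequence_scores(
    token_logprobs: List[float], weights: Optional[List[float]] = None
) -> Dict[str, float]:
    # Joint log P(y | x): every extra token adds a negative term, so longer
    # answers score lower even when each token is confident.
    joint = sum(token_logprobs)
    # Length normalization: average per-token log-probability.
    length_normalized = joint / len(token_logprobs)
    scores = {"joint_logprob": joint, "ln_logprob": length_normalized}
    if weights is not None:
        # Weighted average (a weighted geometric mean in probability space);
        # weights are hand-supplied importance values, an assumption here.
        total = sum(weights)
        scores["weighted_logprob"] = (
            sum(w * lp for w, lp in zip(weights, token_logprobs)) / total
        )
    return scores


if __name__ == "__main__":
    short = sequence_scores([math.log(0.8)])
    long_answer = sequence_scores(
        [math.log(0.95)] * 3 + [math.log(0.8)], weights=[0.1, 0.1, 0.1, 1.0]
    )
    print(short, long_answer)
```

Here the four-token answer ends in the same low-probability content token as the one-token answer, yet its joint score is lower; its length-normalized score is dominated by the three confident filler tokens, and up-weighting the content token pulls the score back toward that token's probability.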

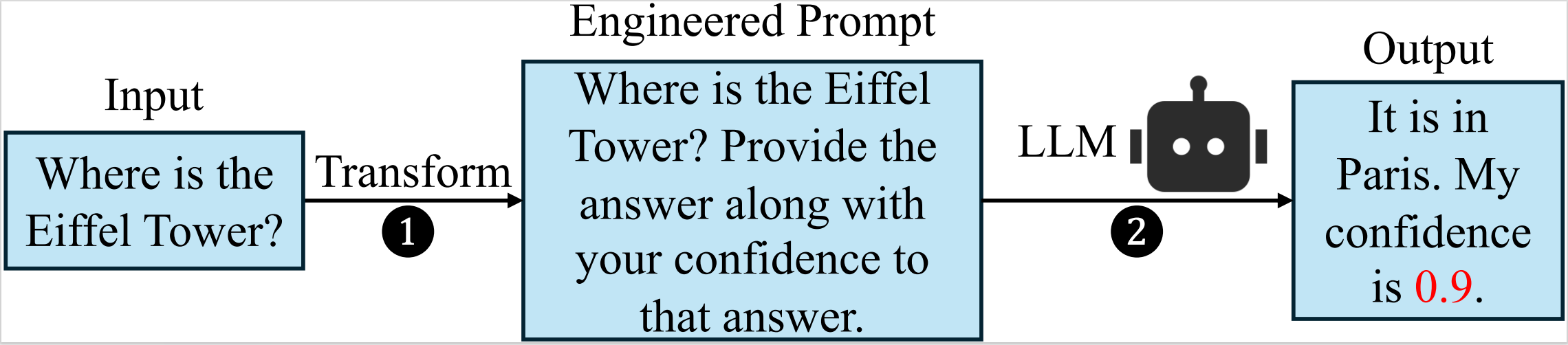

2. Verbalized Confidence (Prompt-Based)

These methods treat the LLM as a black box, leveraging prompt engineering to explicitly query the model for its confidence level or to generate reasoning paths (Chain-of-Thought) regarding its certainty.

Mechanism:

- Direct Query: Prompting the model to output a numerical score or a linguistic confidence marker along with the answer.

- Framework: Often involves multi-stage prompting (Answer generation $\rightarrow$ Confidence elicitation).
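
A verbalized pipeline needs a prompt and a parser for the model's stated confidence. The sketch below assumes a hypothetical output format with an `Answer:` line and a `Confidence: <0-100>%` line; the template wording and the helper `parse_verbalized` are illustrative, not taken from any of the papers listed in this section, and the model call itself is omitted.

```python
import re
from typing import Optional, Tuple

# Hypothetical prompt template; the wording is an assumption.
PROMPT_TEMPLATE = (
    "Answer the question, then state how confident you are that your answer is correct.\n"
    "Use the format:\nAnswer: <answer>\nConfidence: <0-100>%\n\n"
    "Question: {question}"
)

_ANSWER_RE = re.compile(r"Answer:\s*(.+)")
_CONF_RE = re.compile(r"Confidence:\s*(\d{1,3}(?:\.\d+)?)\s*%?")


def parse_verbalized(response: str) -> Tuple[Optional[str], Optional[float]]:
    """Extract (answer, confidence in [0, 1]) from a response; None if missing."""
    answer_match = _ANSWER_RE.search(response)
    conf_match = _CONF_RE.search(response)
    answer = answer_match.group(1).strip() if answer_match else None
    confidence = None
    if conf_match:
        value = float(conf_match.group(1))
        # Discard out-of-range numbers instead of clipping them.
        if 0.0 <= value <= 100.0:
            confidence = value / 100.0
    return answer, confidence
```

Returning `None` for unparseable or out-of-range confidences keeps format failures visible, which matters because verbalized scores depend heavily on prompt design.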

Limitations:

- Performance is heavily dependent on prompt design.

- Prompting alone, without fine-tuning, struggles to improve how well confidence scores separate correct from incorrect predictions.

Key Literature:

- Teaching Models to Express Their Uncertainty in Words

- Method: GPT-3 is fine-tuned to output both an answer and a verbalized confidence level (a number such as “90%” or a word such as “high”).

- Just Ask for Calibration: Strategies for Eliciting Calibrated Confidence Scores from Language Models Fine-Tuned with Human Feedback

- Method: Explores various strategies including Label Probability, “IsTrue” Probability, and Verbalized methods (1-step vs. 2-step, Top-K, CoT).

- Can LLMs Express Their Uncertainty? An Empirical Evaluation of Confidence Elicitation in LLMs

- Method: Proposes a 3-stage framework for black-box LLMs: (1) Prompting for confidence, (2) Sampling diverse responses, (3) Aggregation (ranking/averaging).

- Quantifying Uncertainty in Natural Language Explanations of Large Language Models

- Method: Measures uncertainty in reasoning steps. Uses Token Importance Scoring (via sample probing) and Step-wise CoT Confidence (via model probing).
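
The aggregation stage of the sampling-based framework above can be illustrated with a small sketch: given several (answer, verbalized confidence) pairs from repeated queries, it reports the majority answer, the fraction of samples agreeing with it, and a confidence-weighted agreement score. This is one plausible aggregation rule, loosely modeled on the averaging strategy, not the exact formulation of any paper; answers are assumed to be pre-normalized strings.

```python
from collections import Counter
from typing import List, Tuple


def aggregate_samples(samples: List[Tuple[str, float]]) -> Tuple[str, float, float]:
    """samples: (answer, verbalized confidence in [0, 1]) pairs from repeated queries.

    Returns (majority answer, agreement fraction, confidence-weighted agreement).
    """
    answers = [answer for answer, _ in samples]
    majority, count = Counter(answers).most_common(1)[0]
    consistency = count / len(samples)
    total_conf = sum(conf for _, conf in samples)
    if total_conf > 0:
        # Share of the total stated confidence that backs the majority answer.
        weighted = sum(conf for answer, conf in samples if answer == majority) / total_conf
    else:
        # All confidences are zero: fall back to plain agreement.
        weighted = consistency
    return majority, consistency, weighted
```

Weighting by stated confidence lets a few confident agreeing samples outweigh hesitant dissenting ones, while plain agreement treats every sample equally.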

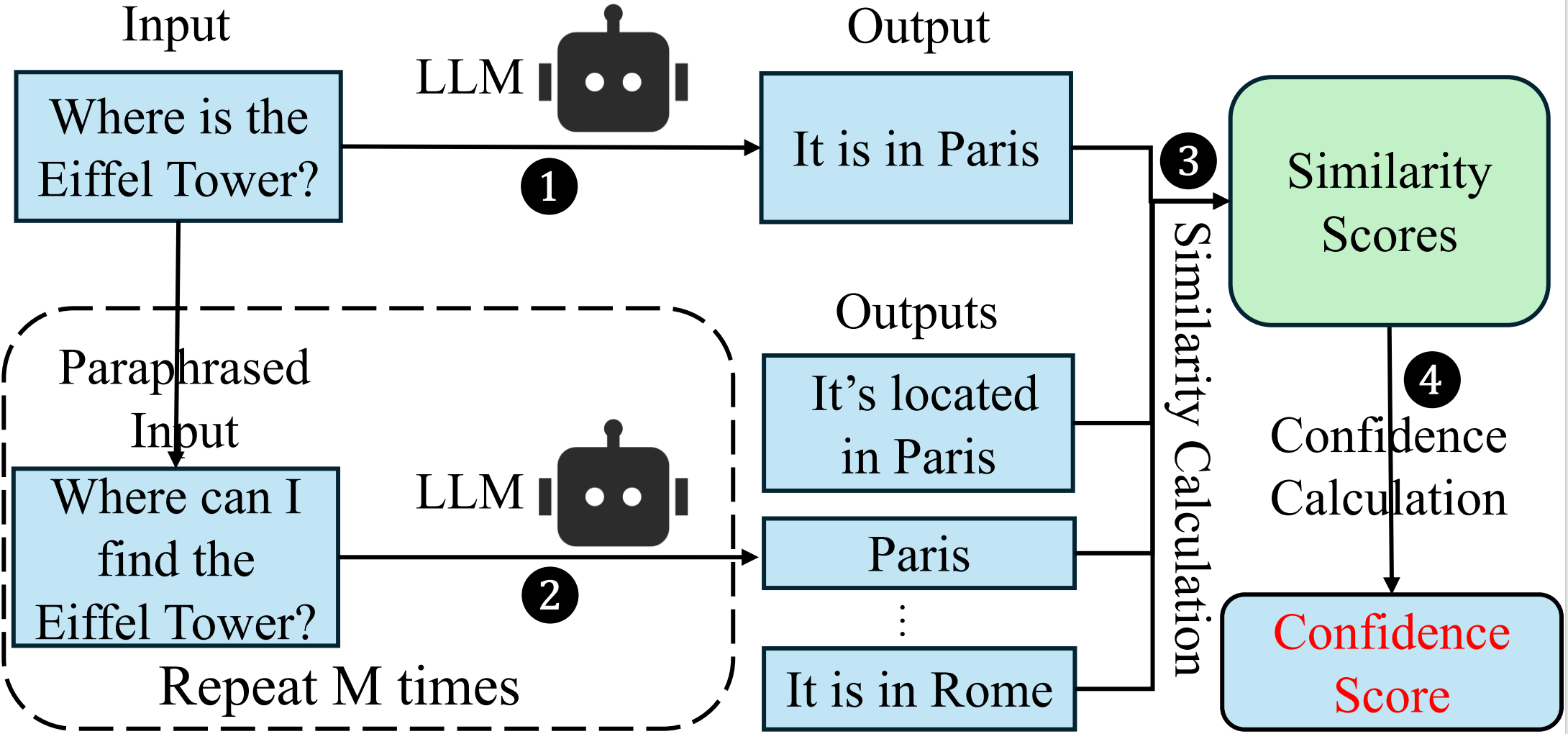

3. Consistency and Ensemble-Based Methods

This approach is based on the intuition that if a model is confident, multiple sampled generations should be consistent. If the model is hallucinating, the generations will likely diverge.

Mechanism:

- Sampling: Generate multiple responses for the same input (or perturbed inputs).

- Aggregation: Measure consistency via surface-level similarity (lexical overlap) or semantic-level similarity (clustering/embedding distance).
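
The simplest surface-level consistency score can be sketched as one minus the mean pairwise Jaccard similarity between sampled responses. This assumes whitespace tokenization and that the samples were already generated; the function names are hypothetical.

```python
from itertools import combinations
from typing import List


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two responses, in [0, 1]."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)


def lexical_uncertainty(samples: List[str]) -> float:
    """1 - mean pairwise Jaccard similarity; 0 means all samples agree lexically."""
    pairs = list(combinations(samples, 2))
    if not pairs:
        return 0.0
    mean_similarity = sum(jaccard(a, b) for a, b in pairs) / len(pairs)
    return 1.0 - mean_similarity
```

Because Jaccard compares word sets, “the capital is Paris” and “Paris is the capital” count as identical, while “Paris” and “The French capital” count as completely different; this blindness to meaning is what motivates the semantic methods in 3.2.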

Limitations:

- High computational cost due to multiple generation passes.

- Requires complex aggregation logic (clustering or an external judge model).

3.1 Consistency without Semantic Clustering (Surface Level)

Focuses on lexical consistency or input perturbations.

Key Literature:

- Decomposing Uncertainty for Large Language Models through Input Clarification Ensembling

- Method: Generates clarifications for potentially ambiguous inputs and ensembles the results.

- Look Before You Leap: An Exploratory Study of Uncertainty Measurement for Large Language Models

- SPUQ: Perturbation-Based Uncertainty Quantification for Large Language Models

- Generating with Confidence: Uncertainty Quantification for Black-box Large Language Models
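
Black-box methods in this line of work often summarize a pairwise similarity matrix over sampled responses with graph statistics. Below is a rough sketch of an eigenvalue-based score ($U_{\text{EigV}}$) and a degree-based score ($U_{\text{Deg}}$) of the kind associated with Generating with Confidence, assuming a symmetric similarity matrix `W` with entries in [0, 1] and ones on the diagonal. How `W` is built (lexical overlap such as Jaccard, or an NLI model) is left to the caller, and the details may differ from the paper's exact formulation.

```python
import numpy as np


def eigval_uncertainty(W: np.ndarray) -> float:
    """Sum of max(0, 1 - eigenvalue) of the normalized graph Laplacian.

    Acts roughly like a soft count of distinct meanings: about 1 when all
    samples agree, about m when all m samples are mutually dissimilar.
    """
    W = np.asarray(W, dtype=float)
    m = W.shape[0]
    degrees = W.sum(axis=1)  # positive because the diagonal is assumed to be 1
    d_inv_sqrt = 1.0 / np.sqrt(degrees)
    laplacian = np.eye(m) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    eigvals = np.linalg.eigvalsh(laplacian)  # Laplacian is symmetric
    return float(np.clip(1.0 - eigvals, 0.0, None).sum())


def degree_uncertainty(W: np.ndarray) -> float:
    """trace(m*I - D) / m^2: 0 when all samples are fully similar."""
    W = np.asarray(W, dtype=float)
    m = W.shape[0]
    degrees = W.sum(axis=1)
    return float((m - degrees).sum() / m**2)
```

For three identical samples (`W` all ones) the eigenvalue score is about 1 and the degree score is 0; for three mutually unrelated samples (`W` the identity) they are about 3 and 2/3.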

3.2 Semantic Clustering Uncertainty (Deep Level)

Focuses on the meaning of the generated text. Different phrasings with the same meaning are grouped together.

Key Literature:

- Semantic Uncertainty: Linguistic Invariances for Uncertainty Estimation in Natural Language Generation [ICLR 2023]

- Method (SE): Introduces Semantic Entropy, grouping generations by meaning (using NLI) before calculating entropy.

- Detecting Hallucinations in Large Language Models Using Semantic Entropy [Nature 2024]

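- Method (DSE): Applies semantic entropy to hallucination (confabulation) detection and introduces discrete semantic entropy, which estimates cluster probabilities from sample counts and therefore does not need token probabilities.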

- Semantic Entropy Probes: Robust and Cheap Hallucination Detection in LLMs [ICLR 2025 Submission]

- Method (SeP): Trains a linear probe on hidden states to predict semantic entropy from a single generation, avoiding the cost of repeated sampling.

- Shifting Attention to Relevance: Towards the Predictive Uncertainty Quantification of Free-Form Large Language Models [ICLR 2024 Submission]

- Method (SAR): Reweights tokens and sentences by their semantic relevance when quantifying uncertainty, so that irrelevant parts of a generation contribute less.

- Kernel Language Entropy: Fine-grained Uncertainty Quantification for LLMs from Semantic Similarities [NeurIPS 2024]

- Method (KLE): Builds a positive semidefinite kernel from semantic similarities between generations and uses its von Neumann entropy, generalizing semantic entropy beyond hard clusters.

- INSIDE: LLMs’ Internal States Retain the Power of Hallucination Detection [ICLR 2024 Poster]

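- Method (EigenScore): Uses the eigenvalues of the covariance matrix of internal embeddings from multiple sampled responses to measure their semantic consistency and detect hallucinations.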

- Semantically Diverse Language Generation for Uncertainty Estimation in Language Models [ICLR 2025 Poster]

- Method (SDLG): Steers generation toward semantically diverse yet likely outputs, yielding a more sample-efficient estimate of semantic uncertainty.

- Beyond Semantic Entropy: Boosting LLM Uncertainty Quantification with Pairwise Semantic Similarity

- Method (SNNE): Enhances semantic entropy by leveraging pairwise similarity measures.
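
The core computation behind semantic entropy can be sketched as follows: cluster the samples by meaning, estimate each cluster's probability, and take the entropy over clusters. In the original method, equivalence is decided by bidirectional entailment with an NLI model; here `equivalent` is a caller-supplied function, and any toy string-matching rule used with it is only a placeholder. The sketch shows both a probability-weighted estimate (sequence probabilities summed per cluster and renormalized over the drawn samples) and a count-based (discrete) variant that needs no token probabilities.

```python
import math
from typing import Callable, List, Sequence

Equivalence = Callable[[str, str], bool]


def cluster_by_meaning(samples: Sequence[str], equivalent: Equivalence) -> List[List[int]]:
    """Greedy clustering: each sample joins the first cluster whose first member matches."""
    clusters: List[List[int]] = []
    for i, text in enumerate(samples):
        for cluster in clusters:
            if equivalent(samples[cluster[0]], text):
                cluster.append(i)
                break
        else:
            clusters.append([i])
    return clusters


def semantic_entropy(
    samples: Sequence[str], seq_logprobs: Sequence[float], equivalent: Equivalence
) -> float:
    """Entropy over meaning clusters, weighting each sample by its sequence probability."""
    clusters = cluster_by_meaning(samples, equivalent)
    shift = max(seq_logprobs)  # subtract the max log-prob to avoid underflow in exp
    probs = [math.exp(lp - shift) for lp in seq_logprobs]
    total = sum(probs)
    cluster_probs = [sum(probs[i] for i in c) / total for c in clusters]
    return -sum(p * math.log(p) for p in cluster_probs if p > 0.0)


def discrete_semantic_entropy(samples: Sequence[str], equivalent: Equivalence) -> float:
    """Count-based variant: cluster probability = fraction of samples in the cluster."""
    clusters = cluster_by_meaning(samples, equivalent)
    n = len(samples)
    return -sum(len(c) / n * math.log(len(c) / n) for c in clusters)
```

With a placeholder rule that ignores case and trailing periods, the samples “Paris”, “paris.”, “Lyon”, “Paris” form two clusters of sizes 3 and 1, so the discrete estimate is the entropy of (0.75, 0.25); a purely lexical score would instead treat “Paris” and “paris.” as different.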

4. Structural and Graph-Enhanced Methods

A relatively new category that derives uncertainty from the syntactic and structural relationships within the generated text.

Key Literature:

- GENUINE: Graph Enhanced Multi-level Uncertainty Estimation for Large Language Models [EMNLP 2025]

- Method: Performs lexical and syntactic analysis on the generated answer to construct a graph. Uncertainty is derived from the structural properties and relations within this graph.

5. Hidden State Supervised Training (Probing)

This approach involves training a lightweight classifier (probe) on the model’s internal hidden states (activations) to predict correctness or uncertainty.

Mechanism:

- Feature Extraction: Extracts activation vectors from specific layers.

- Supervision: Trains a linear classifier (e.g., logistic regression or a linear SVM) to distinguish between correct and incorrect generations.
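
A minimal probing sketch, assuming one feature vector per generation (e.g., a chosen layer's hidden state) and binary correctness labels already collected: it trains a logistic-regression probe with full-batch gradient descent in NumPy and returns the predicted probability of correctness, which can serve as a confidence score (one minus it as uncertainty). Feature extraction is omitted, the function names are hypothetical, and the hyperparameters are arbitrary defaults; in practice a library implementation such as scikit-learn's `LogisticRegression` would be the usual choice.

```python
import numpy as np


def train_linear_probe(X, y, lr: float = 0.1, epochs: int = 500, l2: float = 1e-3):
    """Logistic-regression probe.

    X: (n, d) hidden-state features; y: (n,) labels, 1 = correct, 0 = incorrect.
    Returns weights w (d,) and bias b.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted P(correct)
        # Gradient of mean cross-entropy plus an L2 penalty on w.
        grad_w = X.T @ (p - y) / n + l2 * w
        grad_b = float(np.mean(p - y))
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict_correctness(X, w, b) -> np.ndarray:
    """Probability that each generation is correct; 1 - p can serve as uncertainty."""
    X = np.asarray(X, dtype=float)
    return 1.0 / (1.0 + np.exp(-(X @ w + b)))
```

Keeping the probe linear makes it cheap to train and to apply at inference time, since it adds only a dot product on top of activations the model already computes.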

Key Literature:

- Uncertainty Estimation and Quantification for LLMs: A Simple Supervised Approach

- Method: Extracts features from hidden states and uses supervised learning to train a linear classifier for uncertainty prediction.
